First concepts of Artificial Intelligence

Before we even had the technology to build it, science fiction portrayed AI in many forms, from the Tin Man in The Wizard of Oz to the humanoid robot that impersonates Maria in Metropolis. By the 1950s, a generation of scientists, mathematicians and philosophers had culturally assimilated the concept of artificial intelligence.

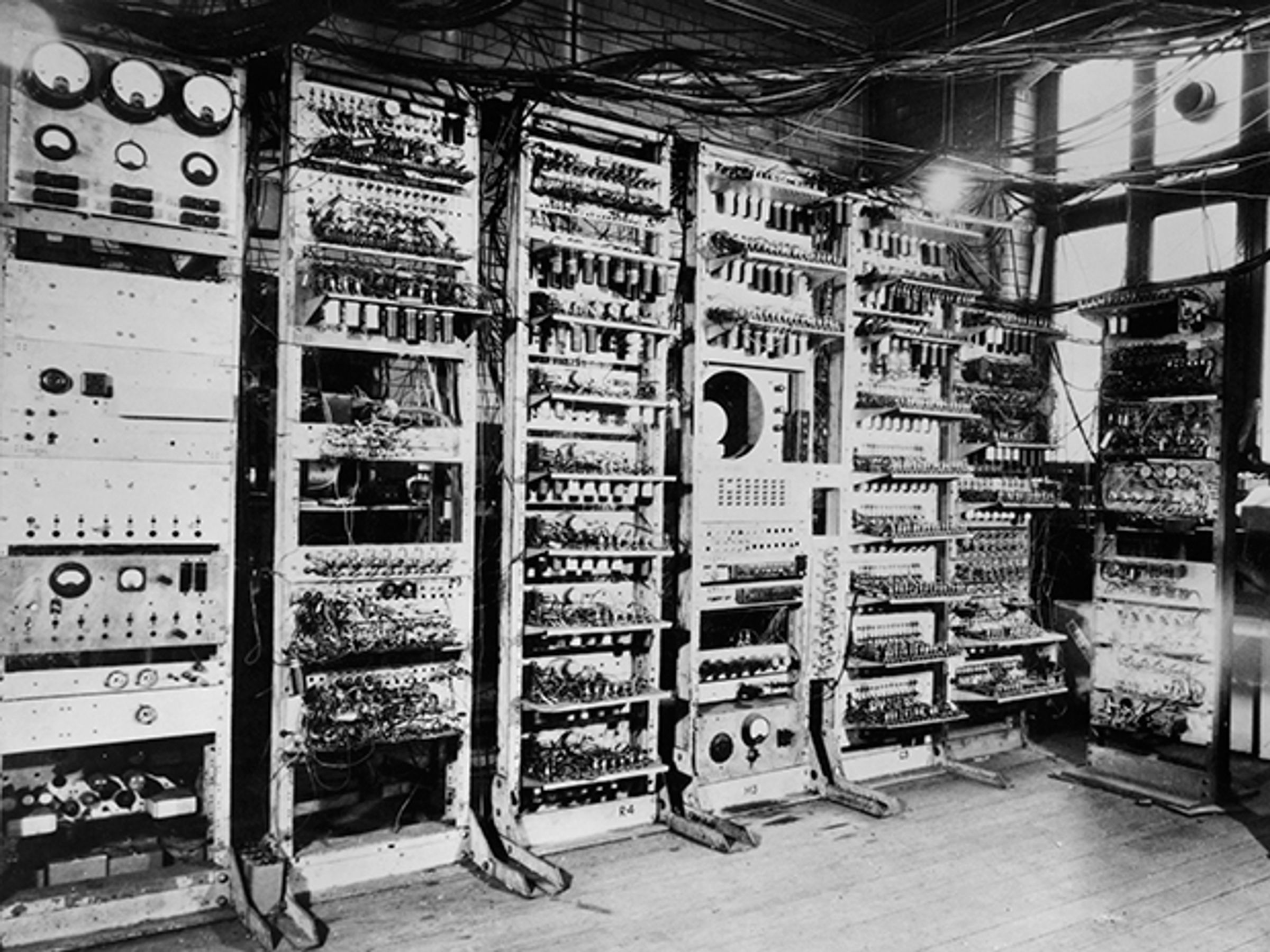

Alan Turing was an English mathematician who explored the mathematical possibility of Artificial Intelligence. He reasoned that, since humans use available information as well as reason to solve problems and make decisions, machines could do the same. This was the logical framework of his 1950 paper, "Computing Machinery and Intelligence", in which he discussed how to build intelligent machines and how to test their intelligence.

But there were a few setbacks at the time. First, computers lacked a key prerequisite for intelligence: they could not store commands, only execute them. In other words, they could be told what to do, but they could not remember what they did. Second, computers were still extremely expensive.

Source: IEEE Spectrum

The big conference

Five years later, Allen Newell, Cliff Shaw and Herbert Simon provided a proof of concept: a program called the Logic Theorist, designed to mimic the problem-solving skills of a human. It is considered by many to be the first artificial intelligence program, and it was presented at the Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI), hosted by John McCarthy and Marvin Minsky in 1956. This historic conference fell short of expectations; although many people attended, they failed to agree on standard methods for the field. Despite this lack of agreement, there was general consensus on one point: that Artificial Intelligence was achievable.

The following years

Between 1957 and 1974, Artificial Intelligence flourished. Computers could now store more information, and they became faster and cheaper. Early demonstrations such as Newell and Simon's showed the promise of Artificial Intelligence and convinced government agencies such as the Defense Advanced Research Projects Agency (DARPA) to fund AI research at several institutions. Optimism was high and expectations were even higher. In 1970, Marvin Minsky told Life Magazine, "from three to eight years we will have a machine with the general intelligence of an average human being." However, there was still a long way to go before that could be achieved.

IBM 350 Disk Storage Unit: The IBM 350 Disk Storage Unit was rolled out in 1956 for use with the IBM 305 RAMAC, providing storage capacities of 5, 10, 15 or 20 million characters. It was configured with 50 magnetic disks containing 50,000 sectors, each of which held 100 alphanumeric characters.

Even with such advances in computational power and storage, it was still not enough. To communicate, for example, a computer needs to store the meanings of many words and understand them in many combinations, and there was simply not enough storage for that much data. As patience wore thin, funding dwindled too, and research slowed for the next ten years.

In the 1980s, Artificial Intelligence saw renewed interest, driven by an expanded algorithmic toolkit and a boost in funding. John Hopfield and David Rumelhart popularized "deep learning" techniques, which allowed computers to learn from experience. At the same time, Edward Feigenbaum introduced expert systems, which mimicked the decision-making process of a human expert. The program would ask an expert in a specific field how to respond in various situations; once enough of these answers had been collected, non-experts could get advice from the program.
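The question-and-answer idea behind expert systems can be illustrated with a small rule-based sketch. This is a hypothetical example, not one of Feigenbaum's actual systems: the car-troubleshooting rules, the fact names and the `infer` function are invented for illustration. The inference strategy shown, forward chaining (repeatedly firing if-then rules until nothing new can be concluded), is one common approach among several that expert systems used.

```python
# Hypothetical knowledge base "elicited from an expert" (car troubleshooting).
# Each rule: if all condition facts are known, conclude the consequent.
RULES: list[tuple[frozenset[str], str]] = [
    (frozenset({"no_crank", "dim_lights"}), "weak_battery"),
    (frozenset({"engine_cranks", "no_start", "fuel_gauge_empty"}), "out_of_fuel"),
    (frozenset({"weak_battery"}), "advice: charge or replace the battery"),
    (frozenset({"out_of_fuel"}), "advice: refuel the car"),
]


def infer(observations: set[str], rules: list[tuple[frozenset[str], str]] = RULES) -> set[str]:
    """Forward chaining: fire every rule whose conditions are satisfied,
    repeating until no new conclusions appear. Returns only derived facts."""
    known = set(observations)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known - set(observations)


if __name__ == "__main__":
    # A non-expert describes the symptoms; the rules supply the expert's advice.
    print(sorted(infer({"no_crank", "dim_lights"})))
```

Keeping the expert's knowledge as plain data (the `RULES` list) separate from the generic `infer` loop mirrors the expert-system idea: the same engine can give advice in a different field if it is handed a different set of rules.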

With this renewed attention, Artificial Intelligence started getting serious funding. The Japanese government heavily funded expert systems and other AI-related endeavors as part of its Fifth Generation Computer Project (FGCP). From 1982 to 1990, it invested $400 million with the goals of revolutionizing computer processing, implementing logic programming, and improving artificial intelligence. But once again, expectations were too high, most of the goals were not met, and the research effort fell short.

The big achievements

Even with the lack of funding, however, Artificial Intelligence thrived, and many of its milestones were achieved in the 1990s. In 1997, reigning world chess champion and grandmaster Garry Kasparov was defeated by IBM's Deep Blue, the first time a reigning world chess champion lost a match to a computer running an Artificial Intelligence program. Around the same time, speech recognition software developed by Dragon Systems was implemented on Windows, and Cynthia Breazeal's Kismet robot was tackling even human emotions. It seemed there was no problem a machine could not handle.

Source: Peter Morgan, Reuters

Moore’s law

Moore's Law is the observation that the number of transistors on a microchip doubles roughly every two years. As a result, we can expect the speed and capability of our computers to increase every two years while we pay less for them. Because the count doubles over each fixed period, this growth is exponential rather than linear. This was a crucial step towards Artificial Intelligence: with more computational power and more storage, we could run more complex programs.
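Under the idealized assumption of an exact two-year doubling period, the transistor count after t years is N(t) = N0 × 2^(t/2), where N0 is the starting count. The short sketch below simply evaluates that formula; the starting count of 1,000 transistors is a made-up number chosen only to make the doubling easy to see, and real chips have never followed the curve this precisely.

```python
def transistor_count(initial: float, years: float, doubling_period: float = 2.0) -> float:
    """Idealized Moore's Law: the count doubles once every `doubling_period` years,
    so after `years` it has been multiplied by 2 ** (years / doubling_period)."""
    return initial * 2 ** (years / doubling_period)


if __name__ == "__main__":
    start = 1_000  # hypothetical starting transistor count
    for years in (0, 2, 10, 20):
        print(f"after {years:2d} years: {transistor_count(start, years):,.0f} transistors")
```

Ten years is five doublings (a 32-fold increase) and twenty years is ten doublings (a 1,024-fold increase), which is why exponential growth so quickly outpaces intuition.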

The era of “Big Data”

We now live in an era in which we can collect huge amounts of information, far more than a human could process in a reasonable time. Artificial Intelligence has proven crucial for processing this kind of information in many different industries. Take Netflix, for example: when you log in to your account and get suggestions curated for you, that is Artificial Intelligence processing large amounts of information, comparing what you have watched and liked against a vast catalog of movies and series. It does so for millions of users all around the world, something that could never be done by hand. That is just one of many examples of how AI helps process enormous amounts of information.
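The source does not describe how Netflix's system actually works, but a classic technique for this kind of recommendation is user-based collaborative filtering: find viewers whose tastes resemble yours and suggest what they liked that you have not watched yet. The sketch below assumes a tiny hypothetical ratings table (the users, titles and scores are invented) and measures similarity of taste with cosine similarity.

```python
from math import sqrt

# Hypothetical ratings (1-5 stars); a missing title means "not watched yet".
RATINGS: dict[str, dict[str, float]] = {
    "ana": {"Dark": 5, "Stranger Things": 4},
    "ben": {"Dark": 5, "Stranger Things": 5, "Black Mirror": 4},
    "cai": {"The Crown": 5, "Bridgerton": 4},
}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine of the angle between two rating vectors (missing titles count as 0)."""
    dot = sum(a[title] * b[title] for title in a.keys() & b.keys())
    norm_a = sqrt(sum(r * r for r in a.values()))
    norm_b = sqrt(sum(r * r for r in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: dict[str, dict[str, float]], top_n: int = 3) -> list[str]:
    """Score unseen titles by other users' ratings, weighted by taste similarity."""
    seen = ratings[user]
    scores: dict[str, float] = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        weight = cosine_similarity(seen, their_ratings)
        if weight <= 0:
            continue  # ignore users with no overlap in taste
        for title, rating in their_ratings.items():
            if title not in seen:
                scores[title] = scores.get(title, 0.0) + weight * rating
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]


if __name__ == "__main__":
    print(recommend("ana", RATINGS))  # → ['Black Mirror']
```

Here "ana" shares both of her titles with "ben", so his unseen pick is suggested, while "cai" has no overlap and contributes nothing. Production systems combine many more signals over far larger data; the sketch only shows the core idea of comparing one viewer's history against everyone else's.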

What to expect from Artificial Intelligence

It is hard to tell what may come next, but we can expect technologies that are already in place to advance and become even more a part of our daily lives. We can expect to see more self-driving cars and more capable language AI, to the point where you will be able to hold a fluent conversation with a machine. The long-term goal seems to be general intelligence: a machine that surpasses human cognitive abilities in all tasks.

Ethical AI

The application of AI can help organizations operate more efficiently, produce cleaner products, reduce harmful environmental impacts, increase public safety, and improve human health. But if used unethically – e.g., for purposes such as disinformation, deception, human abuse, or political suppression – AI can cause severe deleterious effects for individuals, the environment, and society.

The increase in automated data-driven decision-making provides a wealth of opportunities to hold everyone to higher standards than ever before, and to eradicate discrimination from our economic and social systems. That is why it is imperative to develop repeatable frameworks and processes in order to enable accountability, reproducibility, and scalability.

AI for Good Foundation Ethics

Laws and regulations are generally insufficient to ensure the ethical use of Artificial Intelligence. Individuals and organizations who use AI, as well as those who develop and provide AI tools and technology, must practice ethical AI. Users of Artificial Intelligence must take proactive steps to make sure they are using it ethically. This obligation goes beyond issuing statements; there must be specific policies that are actively enforced.

The AI for Good Foundation works to set the standard for AI ethics best practices in company, classroom, and policy settings. Its work is informed by the UN Sustainable Development Goals and the human rights they champion.

Source: Harvard SITN